Vision & NLP Projects

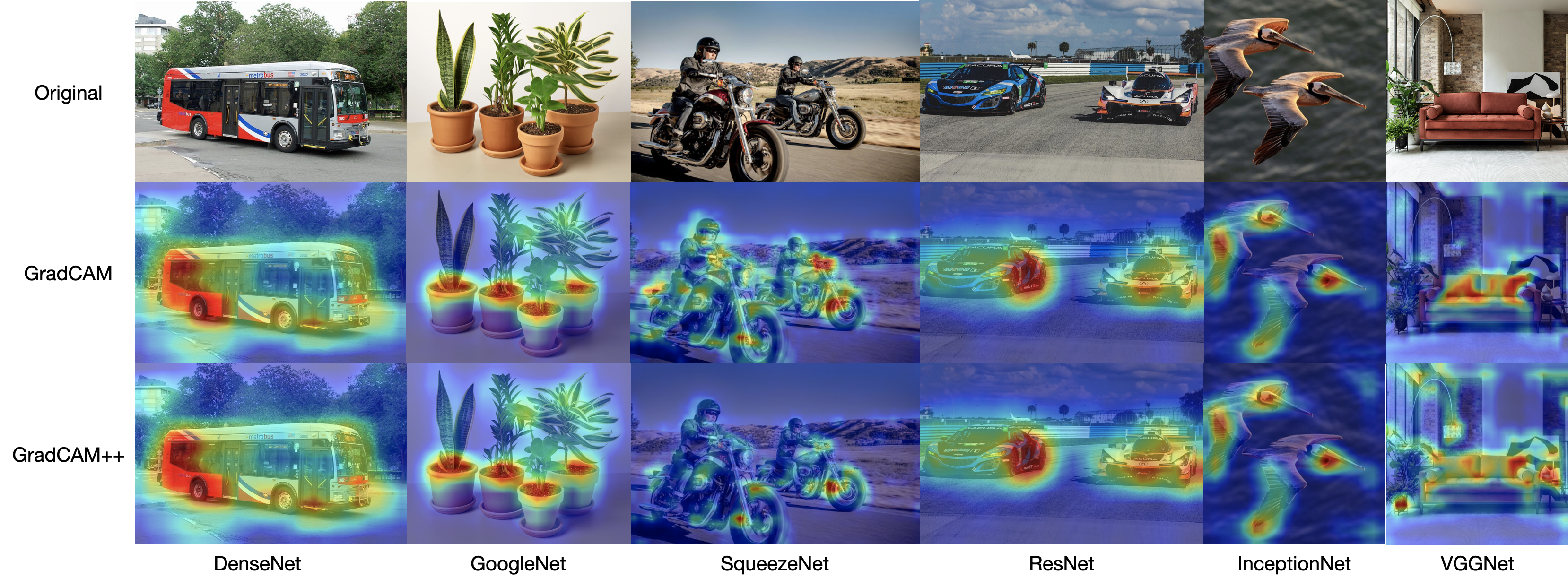

Interpretability in Image Classification Techniques

This is my independent research into how image classification models work and how we can interpret them.

How do computer vision algorithms work internally? How can we interpret their inner workings? These are questions that many researchers have been trying to answer lately. While many CNN architectures exist, such as ResNets, GoogLeNet, VGGNet, and DenseNets, we often don’t know how they work internally or what kind of intuition they build in their intermediate layers before classifying a particular image. This work is an independent study I pursued under the guidance of Professor Nik Brown, in which I tried to interpret these models.

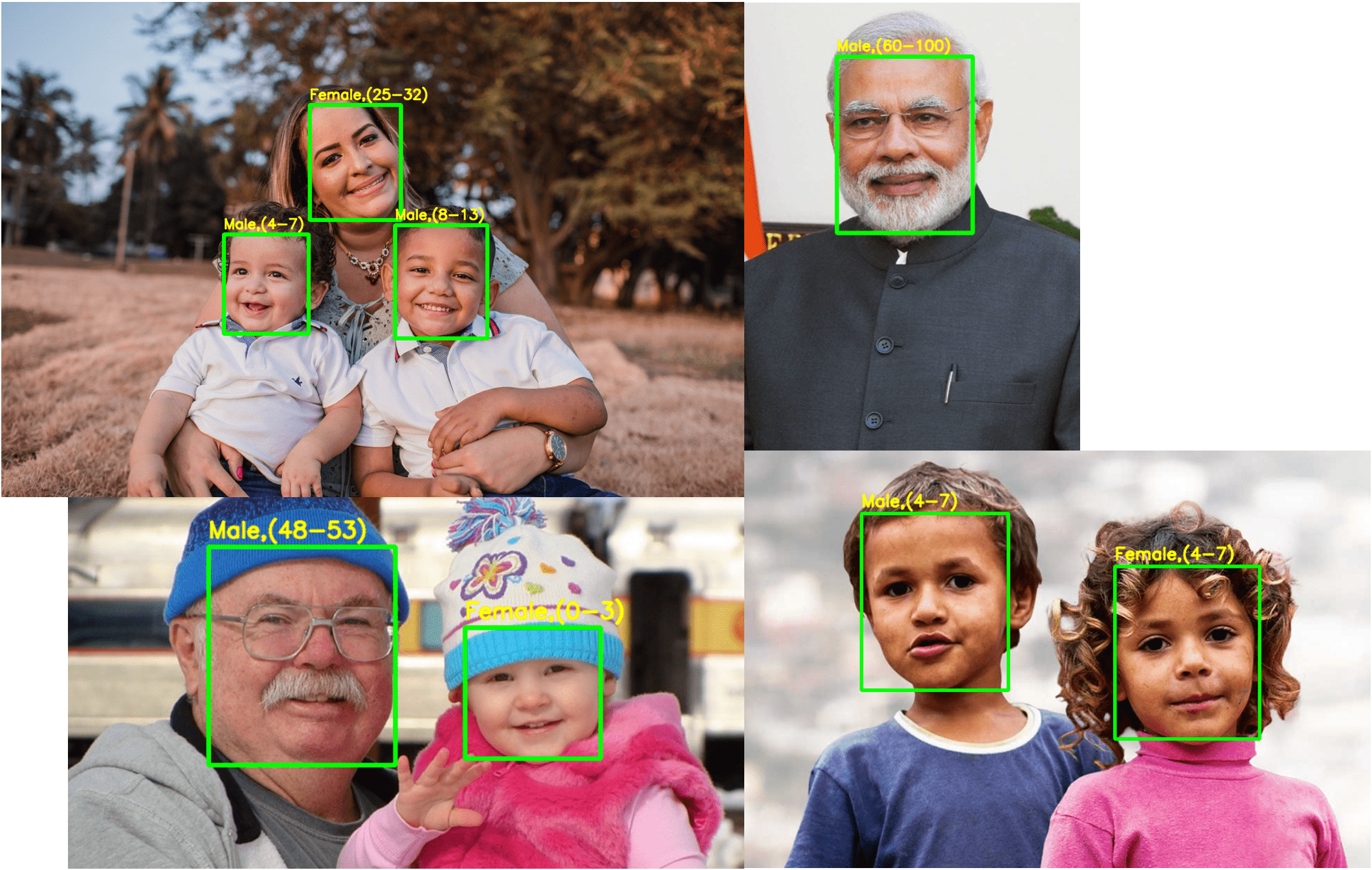

Gender and Age Prediction from Face Images

Contains source code to infer the age and gender of a person given an image of their face.

Predicting gender and age from face images is a fairly challenging task. Determining whether a person is male or female is a comparatively simple binary classification task. Estimating a person’s age, however, is much harder, because it is difficult to judge age from looks alone. Appearances can be surprisingly deceiving, and this project aims to tackle the challenge of predicting human age and gender from face images.

Text-to-Image Metamorphosis using AttnGANs

Contains source code to generate synthetic bird images from a given text input.

Text-to-Image Metamorphosis is the translation of text into an image. Essentially, it is the inverse of image captioning. In image captioning, given an image, we develop a model that generates a caption for it based on the underlying scene. Text-to-Image Metamorphosis instead generates an image from a piece of text by understanding its semantics. In this project, we generate images of birds from captions describing the properties of the bird.

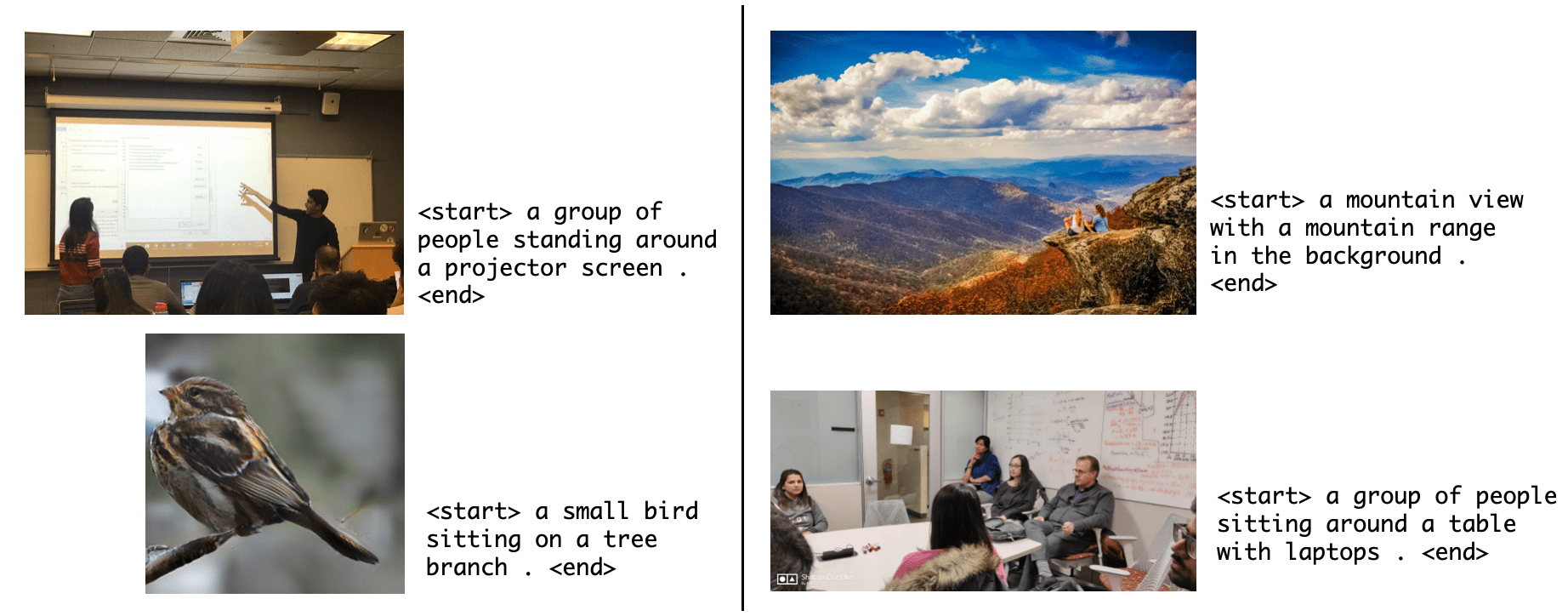

Image Captioning using COCO Dataset

Source code to train a model on the COCO dataset to caption images with descriptive text.

What does a particular scene in an image mean? Given an image, it is easy for humans to describe the scene and the various elements in it, but it is a fairly challenging problem for a computer to do so in a way that generalizes. This project is an effort to explore image captioning and to understand how images are captioned with descriptive text by training a CNN-LSTM hybrid network.

Mouse Brain MRI Image Classification with Data Parallelism

Source code to acquire and auto-stitch brain MRI images distributed across servers and classify them using pretrained models.

Mouse brain MRI scans are used in medical research to understand brain activity when testing a new drug before public release. The brain scans are taken after slicing the mouse brain along three planes: horizontal, sagittal, and coronal. Given a set of mouse brain MRI scans cut along these three planes, we want to identify the class of a given scan image. This project acquires high-resolution (5000x5000) histopathology images that are stored as smaller chunks across the servers and auto-stitches them into an image at a specified magnification level. These images are then scaled to 227x227 for inference with ResNet-50, Inception-ResNet, and ResNeXt-50 models, which are trained using transfer learning with ImageNet weights as initializers.
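As a rough illustration of the stitch-and-scale step described above, here is a minimal pure-Python sketch. It assumes that each chunk is an equally sized grayscale tile keyed by its (row, column) position in the grid, and it uses nearest-neighbour sampling for the rescale. Both are assumptions made for the example, and the names `stitch_tiles` and `resize_nearest` are hypothetical rather than taken from the project's code.

```python
from typing import Dict, List, Tuple

Tile = List[List[int]]  # one grayscale tile as rows of pixel values


def stitch_tiles(tiles: Dict[Tuple[int, int], Tile]) -> Tile:
    """Assemble equally sized tiles, keyed by (row, col) grid position, into one image."""
    n_rows = max(r for r, _ in tiles) + 1
    n_cols = max(c for _, c in tiles) + 1
    tile_h = len(tiles[(0, 0)])
    image: Tile = []
    for r in range(n_rows):
        for y in range(tile_h):
            row: List[int] = []
            # Concatenate the y-th pixel row of every tile in this grid row.
            for c in range(n_cols):
                row.extend(tiles[(r, c)][y])
            image.append(row)
    return image


def resize_nearest(image: Tile, out_h: int, out_w: int) -> Tile:
    """Scale an image to out_h x out_w with nearest-neighbour sampling."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


# Toy example: four 2x2 tiles form a 4x4 image, which is then rescaled
# to the 227x227 input size mentioned in the text.
tiles = {
    (0, 0): [[1, 1], [1, 1]],
    (0, 1): [[2, 2], [2, 2]],
    (1, 0): [[3, 3], [3, 3]],
    (1, 1): [[4, 4], [4, 4]],
}
stitched = stitch_tiles(tiles)
model_input = resize_nearest(stitched, 227, 227)
```

In practice, an imaging library would handle the resampling with better interpolation. The sketch only shows the order of operations: assemble the chunks first, then scale to the network's input size.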

American Sign Language (ASL) detection using CNNs

Source code to detect and decipher American Sign Language.

As humans, we are privileged to be able to talk and communicate with each other. But what about people who cannot speak? They can find it hard to convey their ideas. This project is an effort to develop a system that helps people who cannot speak communicate better with others. We identify all 26 letters of the English alphabet along with 3 special characters: space, delete, and blank. The goal is to build a real-time system that translates signs on the fly.
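To make the 29-class setup concrete, here is a small sketch of how a stream of per-frame predictions could be turned into text. The label order, the label names, and the behaviour of the special classes (delete removes the last character, blank emits nothing) are assumptions for illustration. `decode_predictions` is a hypothetical helper, not the project's actual code.

```python
import string
from typing import List

# Hypothetical label set: 26 letters plus the three special classes.
LABELS: List[str] = list(string.ascii_uppercase) + ["space", "delete", "blank"]


def decode_predictions(class_ids: List[int]) -> str:
    """Turn a stream of predicted class indices into text."""
    chars: List[str] = []
    for class_id in class_ids:
        label = LABELS[class_id]
        if label == "space":
            chars.append(" ")
        elif label == "delete":
            if chars:
                chars.pop()  # undo the last emitted character
        elif label == "blank":
            continue  # no sign in the frame, emit nothing
        else:
            chars.append(label)
    return "".join(chars)


# Predicted classes for: H, I, X, delete, space, blank, A
predicted = [7, 8, 23, 27, 26, 28, 0]
text = decode_predictions(predicted)
```

A real-time system would also need to collapse repeated predictions of the same sign across consecutive frames, which this sketch leaves out.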

Text Generation using Long Short-Term Memory Networks

Contains source code to generate sequences of text from a model trained on a large text corpus.

Traditional neural networks, and deep learning models in general, cannot retain information from one input to the next (i.e., these architectures lack a memory component over time). LSTMs, however, can remember relationships between elements of a sequence, and we can exploit this property to generate new sequences from a model trained on large sequence-based data. In this project, given a large body of essays written by Paul Graham (a computer scientist), we generate new sequences by using LSTMs to learn the underlying semantics.
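The sequence-based training data mentioned above is commonly prepared by sliding a fixed-size window over the corpus, so that each window is paired with the element that follows it. The sketch below shows this at the character level. Character-level modelling and the window size are assumptions here, and `make_training_pairs` and `encode` are hypothetical helper names.

```python
from typing import Dict, List, Tuple


def make_training_pairs(text: str, window: int) -> List[Tuple[str, str]]:
    """Slide a fixed-size window over the text; each window predicts the next character."""
    return [(text[i:i + window], text[i + window]) for i in range(len(text) - window)]


def build_vocab(text: str) -> Dict[str, int]:
    """Map every distinct character to an integer id, in sorted order."""
    return {ch: idx for idx, ch in enumerate(sorted(set(text)))}


def encode(sequence: str, vocab: Dict[str, int]) -> List[int]:
    """Convert a string into the list of ids a sequence model would consume."""
    return [vocab[ch] for ch in sequence]


corpus = "hello world"  # stand-in for the essay corpus
pairs = make_training_pairs(corpus, window=4)
vocab = build_vocab(corpus)
first_input, first_target = pairs[0]
first_input_ids = encode(first_input, vocab)
```

At generation time, the same idea runs in reverse: the model predicts the next character for the current window, the prediction is appended, and the window slides forward by one.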